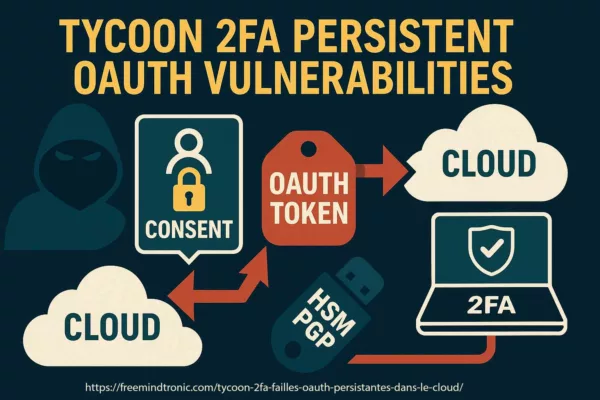

The OpenAI Mixpanel breach is a blunt reminder of a simple rule: the moment sensitive telemetry leaves your perimeter and lands in third-party hands, your security story changes. In November 2025, no passwords, API keys, or prompts were exposed, but actionable metadata was. For attackers, that is often enough: it enables convincing, context-rich phishing and social-engineering campaigns aimed at the people who matter most, namely developers and teams running production API workloads. This chronicle retraces what happened, what it means, and where the red lines are. It also explains why sovereign architectures like PassCypher HSM PGP and PassCypher NFC HSM matter when you want strong access protection without centralized databases.

Executive summary — What to remember from the AI / Mixpanel breach

This executive summary takes about 4 minutes to read. It covers the essential facts, the strategic impact and the key sovereign takeaways.

The OpenAI / Mixpanel breach shows that even major AI players remain exposed when they outsource usage analytics. The November 2025 incident did not compromise passwords, API keys or prompts, but it did reveal sensitive metadata that can be used to precisely target developers and organisations using the API.

Principle — The third-party provider as a weak link

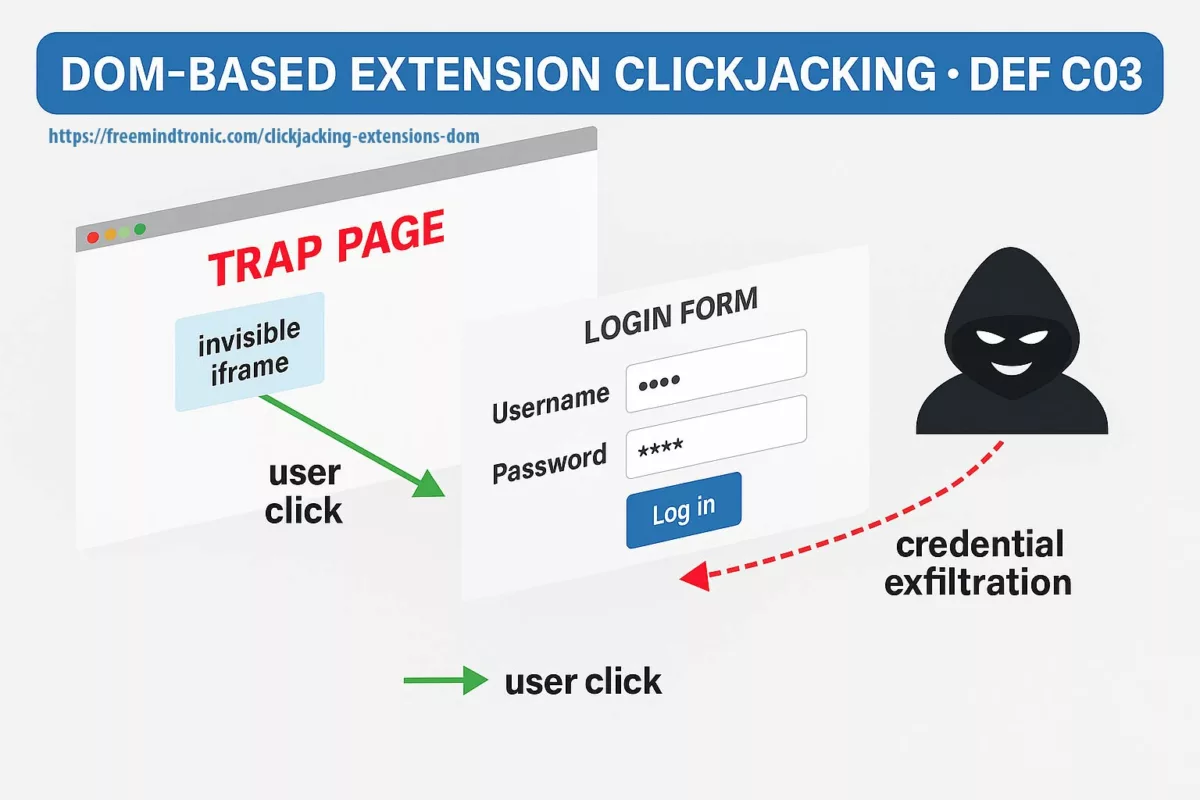

Mixpanel, a former analytics vendor for OpenAI, collected and correlated usage data. Once its infrastructure was compromised, attackers were able to export metadata from API accounts: email addresses, account names, operating systems, browsers and approximate locations. For the end user, nothing changed on the surface. Behind the scenes, an indirect view of technical identities was being assembled.

Finding — Metadata as an attack vector

Even without passwords, this information makes it possible to build highly credible phishing and social-engineering campaigns: messages tailored to real usage patterns, roles, time zones and technical environments. Metadata turns into an industrialised attack lever.

Issue — Why was Mixpanel targeted?

The incident occurred in a context of tougher regulation and rapid enterprise adoption of generative AI. Targeting an analytics provider embedded at the heart of OpenAI’s operational stack amounts to hitting a privileged observation point over high-value usage and profiles.

Sovereign issue — What this breach reveals for organisations

The AI / Mixpanel breach acts as a warning: the more a platform relies on third-party providers, the more it multiplies invisible attack surfaces. Protection does not come from stacking contract clauses, but from the architecture design itself: minimising stored data, strict compartmentalisation, offline HSMs and tight limitations on how identity metadata circulates.

Reading parameters

Quick summary: ≈ 4 min

Extended summary: ≈ 6 min

Full chronicle: ≈ 30 min

Publication date: 2025-11-29

Last update: 2025-11-29

Complexity level: Sovereign & Technical

Technical density: ≈ 68%

Languages available: FR · EN · ES · CAT

Main topics: AI, Mixpanel, metadata, phishing

Editorial type: Chronicle — Freemindtronic Cyberculture Series

Risk level: 7.9 / 10 — Sovereignty & data

TL;DR —

- The Mixpanel breach did not expose passwords or prompts, but it did leak identity metadata with high attack value.

- This metadata is enough to run metadata-driven phishing campaigns against AI API accounts.

- The incident exposes an illusion of control in architectures that depend on third-party analytics.

- Sovereign approaches based on offline HSMs and no centralised databases make a “local Mixpanel” impossible.

- PassCypher HSM PGP and NFC HSM implement a model where secrets never live in the cloud.

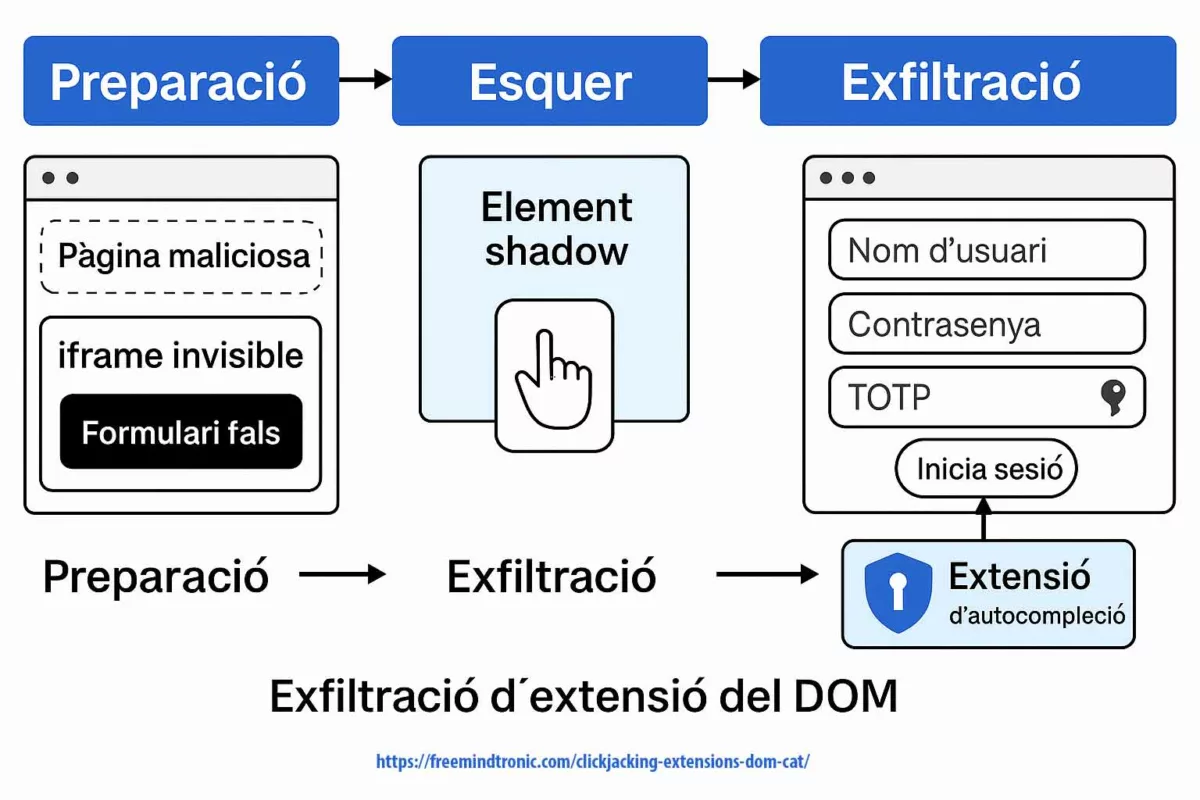

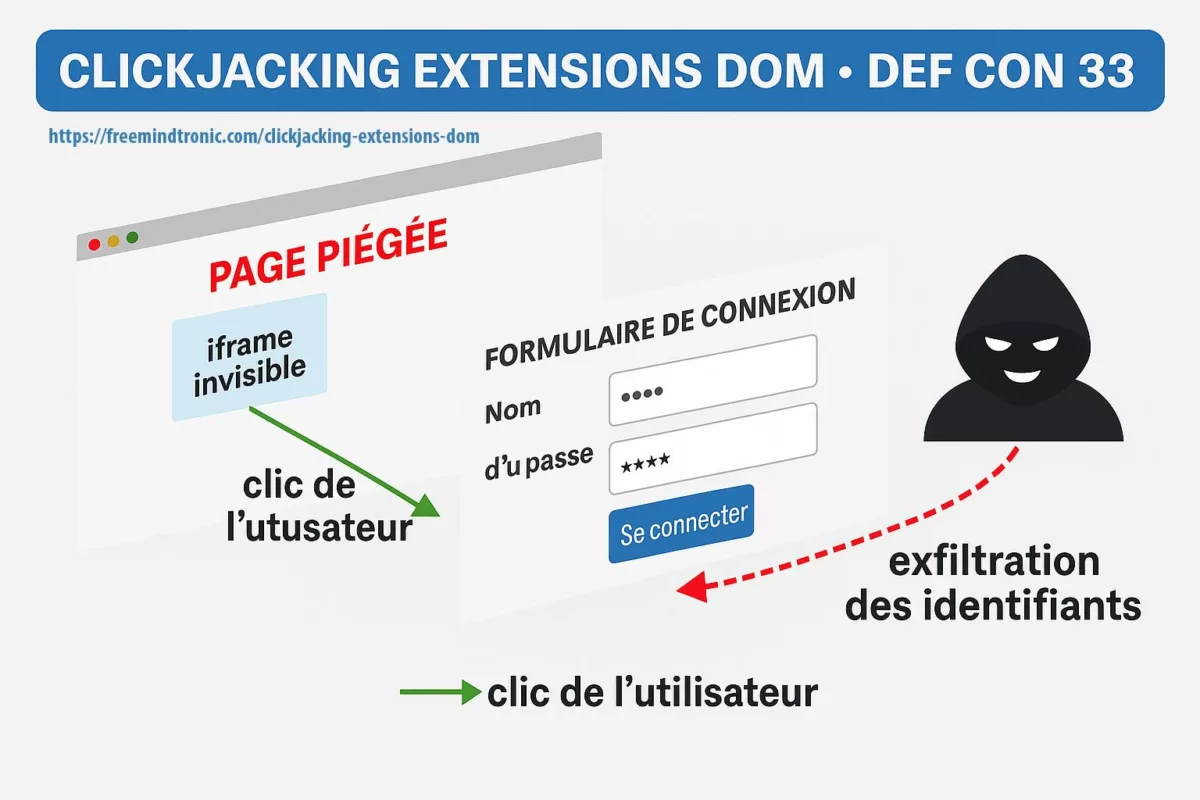

Threat pattern — “Metadata-leak phishing” in 3 moves

- Recon: leaked telemetry identifies who uses AI (org name, role hints, timezone, OS/browser fingerprints).

- Pretext: attacker sends a context-matching message (“API billing migration”, “key rotation notice”, “suspicious login from your city”).

- Capture: BitB / look-alike login steals session + MFA/TOTP in real time.

⮞ Quick synthesis

The AI / Mixpanel breach is not a peripheral anomaly. It reveals a vulnerable ecosystem where dependency on third-party providers exposes high-value technical and relational identities. The question is not “why Mixpanel”, but: “why did critical metadata depend on an external analytics provider in the first place?”

Key insights — Lines of force

- The Mixpanel breach illustrates the fragility of external dependencies in AI architectures.

- The exposed metadata is enough to build credible, targeted phishing attacks.

- The trust rupture is significant for developers and enterprises using the API.

- OpenAI incidents (2023, 2024, 2025) show a systemic pattern around third-party services and centralised architectures.

- Only sovereign architectures (HSMs, local encryption, no centralised databases) prevent the emergence of a “local Mixpanel”.

- Executive summary — What to remember from the AI / Mixpanel breach

- Key insights — Lines of force

- Extended summary — OpenAI / Mixpanel breach, illusion of control and a red line for centralised architectures

- Chronicle — AI / Mixpanel breach and sovereign architectures

- Impact & statistics — AI Mixpanel breach and the real reach of the metadata leak

- What should I do if I am impacted by the AI Mixpanel breach?

- CVE & vulnerability context — A breach without CVE but rich in lessons

- Past incidents — AI breach timeline and recurring weaknesses

- From the Mixpanel breach to sovereign security — HSM architectures and database-free models

- Why PassCypher’s architecture makes a “local Mixpanel” impossible

- Demo video — Sovereign AI / ChatGPT login with PassCypher HSM PGP

- Final comparison — Classic login vs sovereign login facing an AI metadata breach

- FAQ — AI Mixpanel breach & data sovereignty

- Mini-glossary

- Strategic outlook — After the AI Mixpanel breach metadata, which sovereign path?

- Official sources — Understanding the AI Mixpanel breach at the source

- What we didn’t cover in the AI Mixpanel breach metadata

Extended summary — AI/Mixpanel breach metadata, illusion of control and a red line for centralised architectures

Extended reading ≈ 6 min. The OpenAI / Mixpanel breach reveals a deep contradiction: major AI platforms claim to fully control their environment, yet in practice they rely on a constellation of third-party providers for analytics, monitoring, billing and application security. For years, critical metadata derived from API usage flowed through Mixpanel. The 2025 incident shows that even without content leaks or cryptographic secrets, trust can collapse as soon as metadata governance slips out of the platform’s hands.

Principle — The third-party provider as Achilles’ heel

Mixpanel does not invent analytics; it becomes the aggregation surface where weak usage signals converge: endpoints used, technical environments, organisational structures, geographies. Once compromised, this type of provider turns into a privileged sensor used to map the most interesting targets.

Finding — Metadata as a weapon

Attackers do not need direct access to prompts or API keys. Metadata is enough to craft bespoke phishing campaigns: fake AI security messages, abnormal-usage alerts, fake billing migrations or forced key-rotation notices. When those messages are grounded in real usage context, their effectiveness increases dramatically.

Issue — Why Mixpanel, and why now?

The incident comes at a time when organisations are industrialising generative-AI use and regulators are paying more attention to data-processing supply chains. An analytics provider becomes a perfect observation post to erode trust in the central platform while appearing “peripheral”.

Sovereign issue — What the breach shows other players

The AI / Mixpanel breach functions as a demonstration: the more platforms depend on third-party providers, the more they expose their users to systemic risks. Lasting protection depends on redesigning the architecture itself: data minimisation, compartmentalisation, HSMs for identity and secrets, and a drastic reduction in metadata footprints.

⮞ Extended synthesis

The AI / Mixpanel breach is not an isolated glitch. It exposes the limits of a declared sovereignty weakened by heavy reliance on third-party providers. The structural question now is: “how do we design systems that no longer create Mixpanels — neither external, nor internal?”


Chronicle — OpenAI Mixpanel breach metadata and sovereign architectures

The Mixpanel breach wasn’t a simple technical glitch or a “small peripheral leak”. It exposed a structural weakness: major AI platforms claim they run perfectly controlled ecosystems, yet they actually depend on a chain of third-party providers that collect and accumulate sensitive analytics data.

Attackers didn’t steal prompts or API keys. They extracted identity and context metadata: email addresses, account names, operating systems, browsers, and approximate locations. That is enough to run surgical phishing campaigns against developers and organisations—targets far more valuable than a “consumer” account.

“Security by design” promises control, but the Mixpanel breach shows an illusion of sovereignty: users think they interact only with a central platform (OpenAI), while invisible providers collect, correlate, and sometimes expose a large share of their digital footprint. This chronicle dissects that gap between perception and operational reality.

Impact & statistics — OpenAI Mixpanel breach and the real reach of the metadata leak

Strategic frame

With the strategic frame of the OpenAI Mixpanel breach set, it is time to look at the numbers. A data breach is not measured only by the number of exported rows: it is measured above all by the exploitable surface it offers attackers.

Affected population

In the case of the OpenAI Mixpanel breach, the incident centered on analytics data linked to platform.openai.com and affected a limited number of API users. That matters, because this is where the stakes concentrate: production integrations, automation pipelines, and the people who administer high-impact access.

Incident details

- Scope: developers, technical teams and organisations using platform.openai.com with Mixpanel enabled.

- Data exposed: API account name, email address, account or organisation IDs, operating system, browser, approximate location (city / state / country), referring websites.

- Volume: exact number not publicly disclosed. OpenAI speaks of a “limited number of API users” affected.

- Risks: highly targeted phishing campaigns, social-engineering attacks against technical administrators and decision-makers.

- Response: OpenAI cut ties with Mixpanel, initiated a dataset audit, notified users and reiterated MFA best practices.

Summary table

| Element | Details (OpenAI Mixpanel breach) |
|---|---|
| Detection date at Mixpanel | 9 November 2025: unauthorised access to part of the infrastructure |
| Dataset handed to OpenAI | 25 November 2025: exposed dataset shared for analysis |
| Public disclosure window | Official OpenAI statement on 26 November 2025; media coverage from 27 November 2025 |
| Accounts affected | API users (developer platform); no direct impact on standard ChatGPT consumer accounts |
| Type of data exposed | Identification and usage metadata: names, emails, OS, browser, location, referrers |
| Data not compromised | Passwords, API keys, prompts, request logs, payments, sensitive documents |

Final analysis

In practice, this AI Mixpanel breach may look “limited”: no passwords, no API keys, no prompts. However, for an attacker specialising in targeted phishing, such a dataset is a detailed map of high-value targets. This asymmetry between perceived severity and operational value must be integrated into any serious digital-sovereignty strategy.

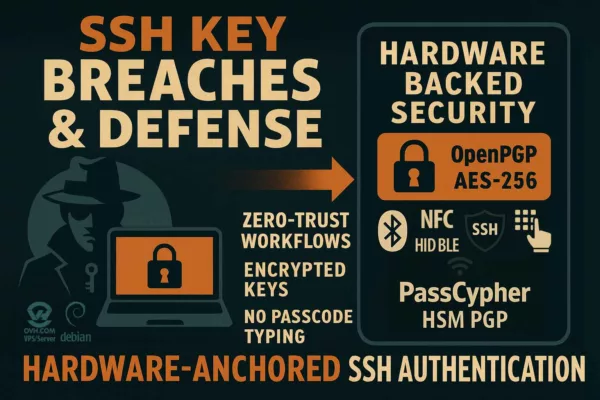

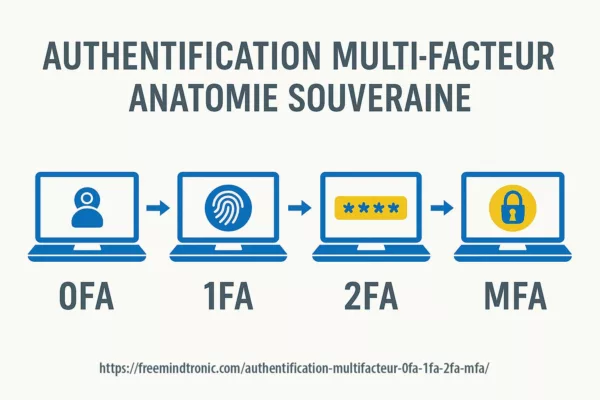

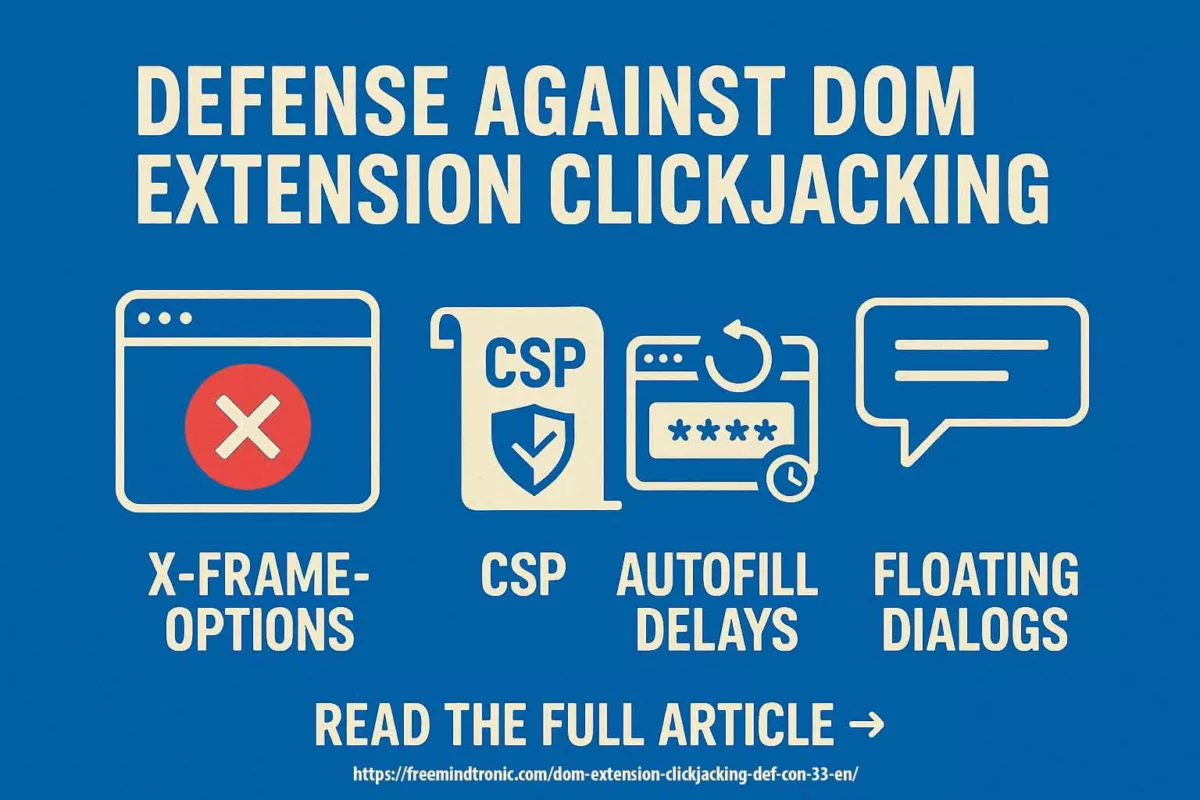

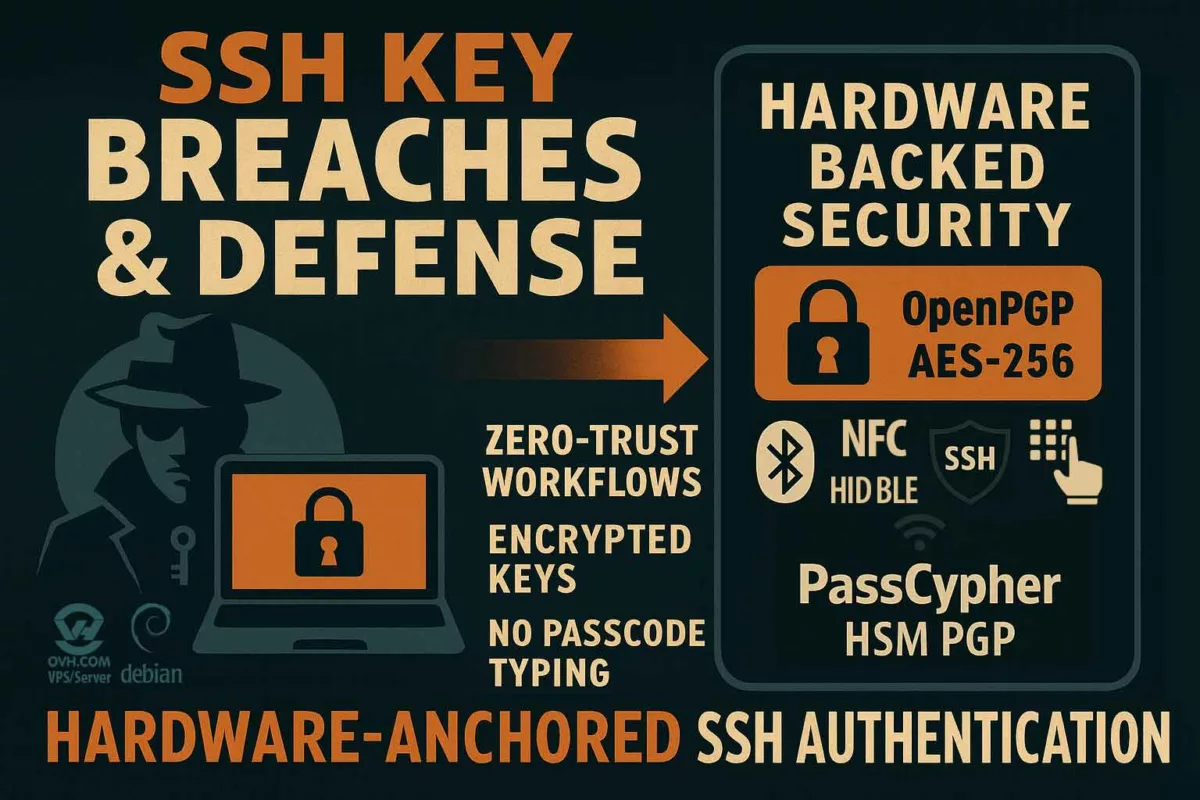

What should I do if I am impacted by the AI Mixpanel breach?

- Review API access: audit active keys, revoke unused ones, segment environments (production, test, R&D).

- Strengthen authentication: enforce MFA on all critical AI accounts, favour sovereign TOTP generators (HSM, PassCypher, etc.).

- Watch for suspicious emails: be especially wary of messages claiming to be “AI security alerts” or “billing updates”.

- Map your third-party providers: identify who sees what (analytics, monitoring, billing) and reduce metadata flows to the bare minimum.

- Start a sovereign trajectory: pilot local secret management with PassCypher HSM PGP or PassCypher NFC HSM on a limited perimeter.
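The first action above (audit active keys, revoke unused ones) can be sketched as a simple idle-time filter. This is a minimal illustration rather than any vendor's API: the `ApiKey` record, its fields and the 30-day threshold are assumptions made for the example, and the key inventory would in practice come from whatever export your provider offers.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class ApiKey:
    # Hypothetical inventory record, not a real provider schema.
    name: str
    environment: str               # e.g. "production", "test", "rnd"
    last_used: Optional[datetime]  # None means the key was never used


def keys_to_revoke(keys: List[ApiKey], now: datetime,
                   max_idle_days: int = 30) -> List[str]:
    """Return the names of keys that were never used or idle for too long."""
    cutoff = now - timedelta(days=max_idle_days)
    return [k.name for k in keys
            if k.last_used is None or k.last_used < cutoff]
```

Running the filter per environment keeps production, test and R&D keys reviewed separately, which matches the segmentation advice in the same bullet.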

Fast checklist — reduce vendor-risk blast radius in 15 minutes

- Freeze and review all third-party analytics tags on authentication and admin pages.

- Rotate high-privilege API keys; revoke unused tokens; enforce least privilege.

- Enable phishing-resistant MFA where possible; isolate TOTP generation from the browser context.

- Harden support workflows: verify “security” emails with out-of-band confirmation.

- Document your vendor risk map: who sees identity metadata, where it’s stored, how long it’s retained.
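To make the first checklist item concrete, the sketch below lists the external hosts that serve scripts on a given page, which is a quick way to spot analytics tags on login or admin screens. It is a rough aid under stated assumptions: it only sees static `<script src>` tags in the HTML you feed it (not tags injected at runtime), and `first_party_host` is a parameter you supply yourself.

```python
from html.parser import HTMLParser
from typing import List
from urllib.parse import urlparse


class ScriptSrcCollector(HTMLParser):
    """Collects the src attribute of every <script> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.sources: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            src = dict(attrs).get("src")
            if src:
                self.sources.append(src)


def third_party_scripts(html: str, first_party_host: str) -> List[str]:
    """Return the sorted external hosts that serve scripts on this page."""
    collector = ScriptSrcCollector()
    collector.feed(html)
    external = set()
    for src in collector.sources:
        host = urlparse(src).hostname
        # Relative URLs have no hostname and count as first party.
        if host and host != first_party_host \
                and not host.endswith("." + first_party_host):
            external.add(host)
    return sorted(external)
```

Any host returned for an authentication or admin page is a candidate entry for the vendor risk map mentioned in the last checklist item.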

CVE & vulnerability context — A breach without CVE but rich in lessons

Once the quantified impact is clear, it is tempting to look for a vulnerability identifier to file the AI Mixpanel breach away. Yet this metadata leak does not fit the usual boxes: no CVE, no famous library exploit, no client-side patch to deploy.

The incident stems from an internal infrastructure compromise at Mixpanel, against a backdrop of smishing and social-engineering campaigns targeting employees. The outcome: a dataset export containing metadata of AI API accounts.

- No public CVE identifier associated with the incident.

- Nature of the attack: compromise of an analytics provider, not an exploit of OpenAI’s code.

- Type of vulnerability: weaknesses in internal access management, session protection and smishing defences.

- Systemic effect: exposure of OpenAI API account metadata that can power targeted campaigns.

| Aspect | Typical CVE-based incident | OpenAI Mixpanel breach (third-party provider) |
|---|---|---|
| Main cause | Documented software bug (buffer overflow, injection, etc.) | Compromise of a third-party provider via social engineering |
| Reference | Public CVE ID | No CVE; incident described in vendor incident posts and reports |
| Remediation | Software patch, version upgrade | Partnership termination, secrets rotation, supplier audit |
| Risk surface | Targeted software component | The whole analytics toolchain and metadata leakage |

In other words, the OpenAI Mixpanel breach reminds us that not all major failures are catalogued in a CVE database. Architectures based on third-party providers can be fully patched yet remain vulnerable at the organisational level. This is precisely where HSM PGP and NFC HSM approaches make sense: they reduce the exploitable value of such metadata by moving critical secrets out of reach.

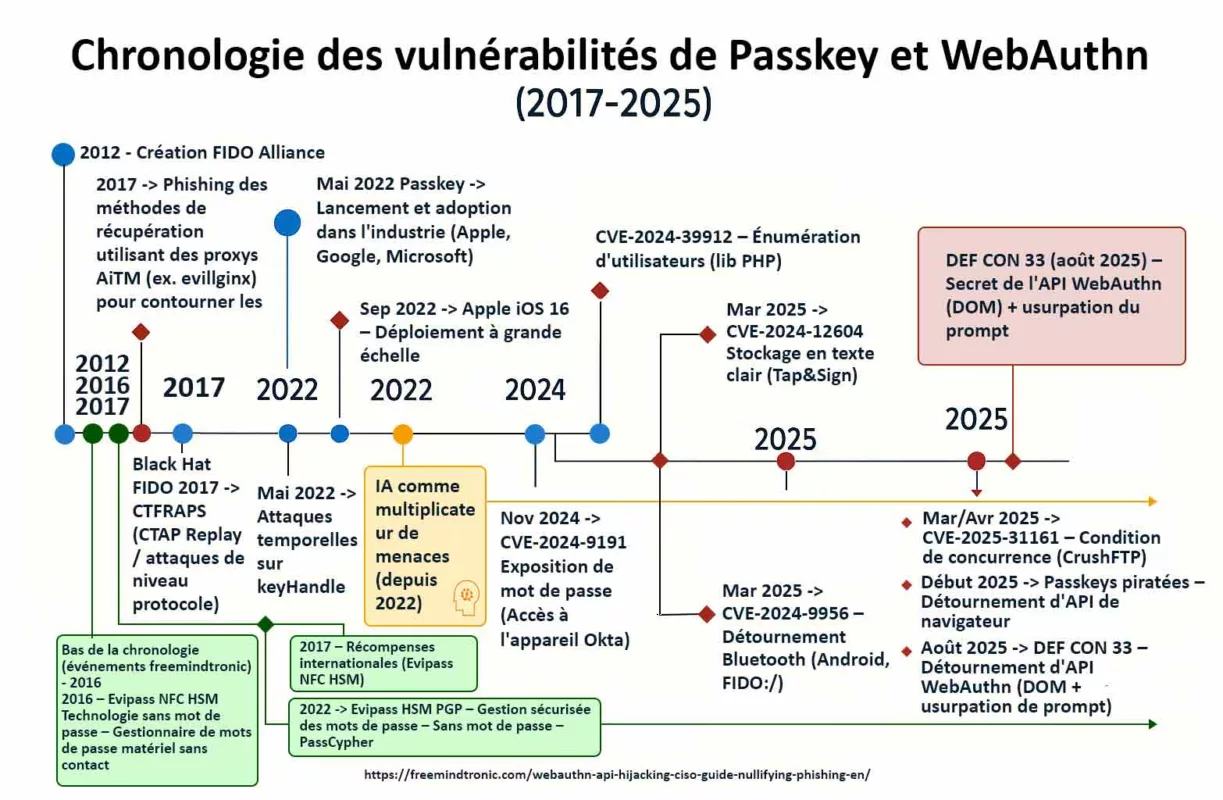

Past incidents — OpenAI breach timeline and recurring weaknesses

The AI Mixpanel breach did not come out of nowhere. It fits into a series of incidents that, taken individually, might be described as “limited”, but that together form a worrying pattern in terms of digital sovereignty.

- March 2023 — Redis bug exposing chat histories and payment information via ChatGPT.

- 2024 — API vulnerabilities enabling indirect exfiltration of prompts and contextual data.

- November 2025 — AI Mixpanel breach exposing metadata of API users (names, emails, OS, location).

In each of these cases, the AI data leak involved different components: database engine, API, analytics provider. Yet the result is always the same: fragmented trust and a boost in attackers’ leverage.

Weak signals — Before the break

Even before the AI Mixpanel breach, several weak signals were visible: multiplication of providers in the toolchain, lack of transparency around analytics, absence of a sovereign model for authentication and secret management. The Mixpanel incident simply turned these signals into a major warning.

This cumulative context is what makes a shift towards models such as PassCypher HSM PGP and PassCypher NFC HSM so relevant: they aim to break dependence on centralised databases and analytics providers by bringing secrets back into a perimeter where we can genuinely talk about digital sovereignty.

From the Mixpanel breach to sovereign security — HSM architectures and database-free models

The timeline of AI incidents shows that fixing issues one by one is no longer enough. The AI Mixpanel breach reminds us that even when the platform core stays intact, a simple metadata leak can power highly effective attacks. What is needed is a change of paradigm, not just a change of provider.

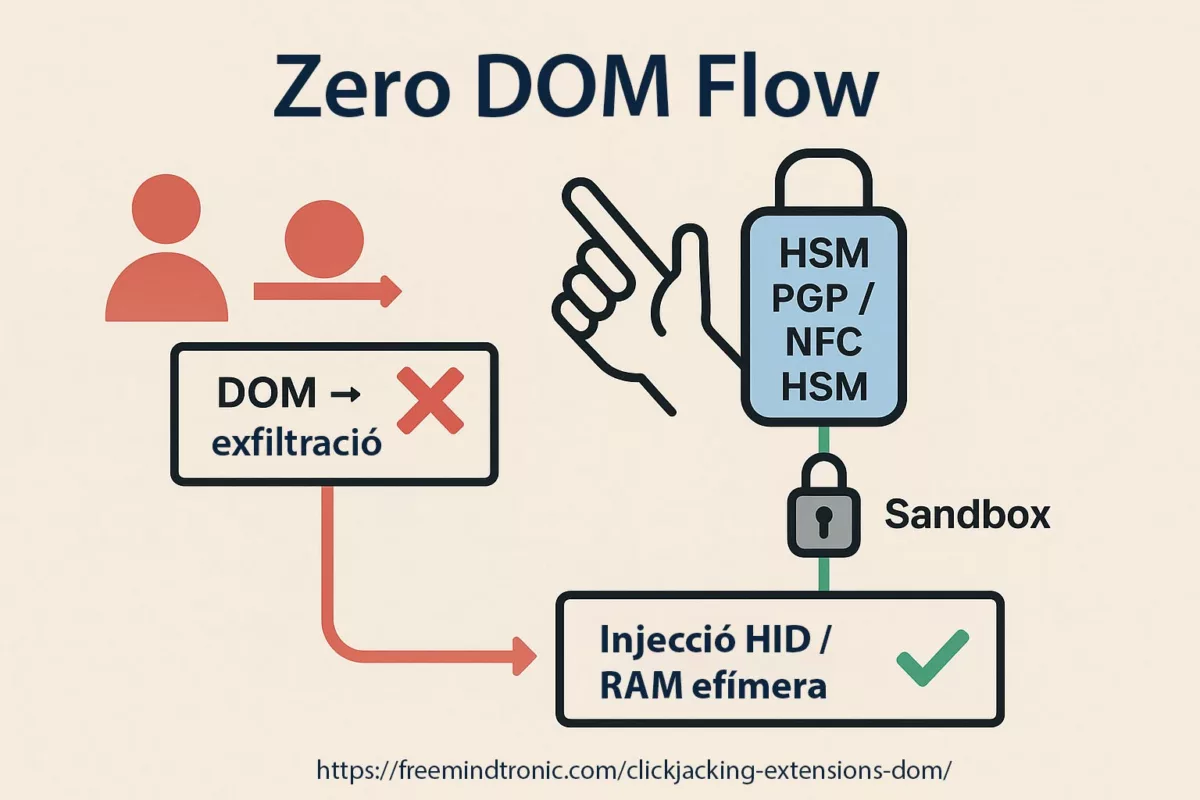

A sovereign model is built on a handful of simple but demanding principles:

- Secrets never stored in centralised databases or at cloud providers.

- Local encryption and offline HSMs, as implemented in PassCypher HSM PGP and PassCypher NFC HSM.

- Phishing neutralised at the root through sandboxed TOTP and strong, hardware-based authentication.

- Metadata minimisation for any analytics shared externally — or no third-party analytics at all for secrets.
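The metadata-minimisation principle can be illustrated with an allow-list applied before any event leaves your perimeter. This is a generic sketch, not a description of PassCypher or of any analytics SDK: the field names, the `ALLOWED_FIELDS` set and the locally held `secret` are assumptions chosen for the example.

```python
import hashlib
import hmac

# Assumption: only these fields are considered safe to share externally.
ALLOWED_FIELDS = {"event", "plan_tier"}


def pseudonymise(user_id: str, secret: bytes) -> str:
    """Keyed hash of the user id; the key never leaves your perimeter."""
    digest = hmac.new(secret, user_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def minimise_event(event: dict, secret: bytes) -> dict:
    """Drop every field not on the allow-list and replace the identity."""
    slim = {k: v for k, v in event.items() if k in ALLOWED_FIELDS}
    slim["subject"] = pseudonymise(event["user_id"], secret)
    return slim
```

With this shape, a compromised analytics provider would hold event counts tied to opaque identifiers, without the emails, operating systems, browsers or locations that made the leaked dataset useful for targeting.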

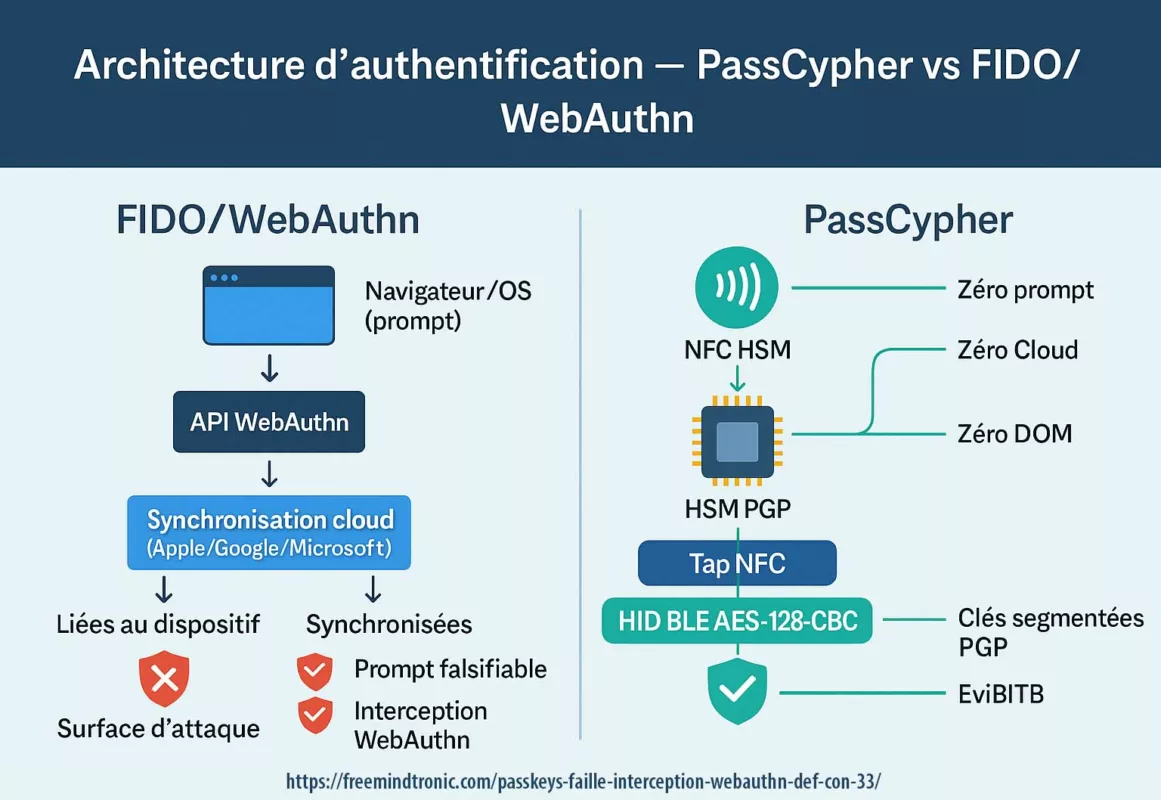

Where the AI metadata leak reveals the limits of centralised architectures, PassCypher offers a model where risk is contained upstream: even if an analytics provider were compromised, it would not gain access to any real secret, nor to sufficiently rich identities to build dedicated attacks.

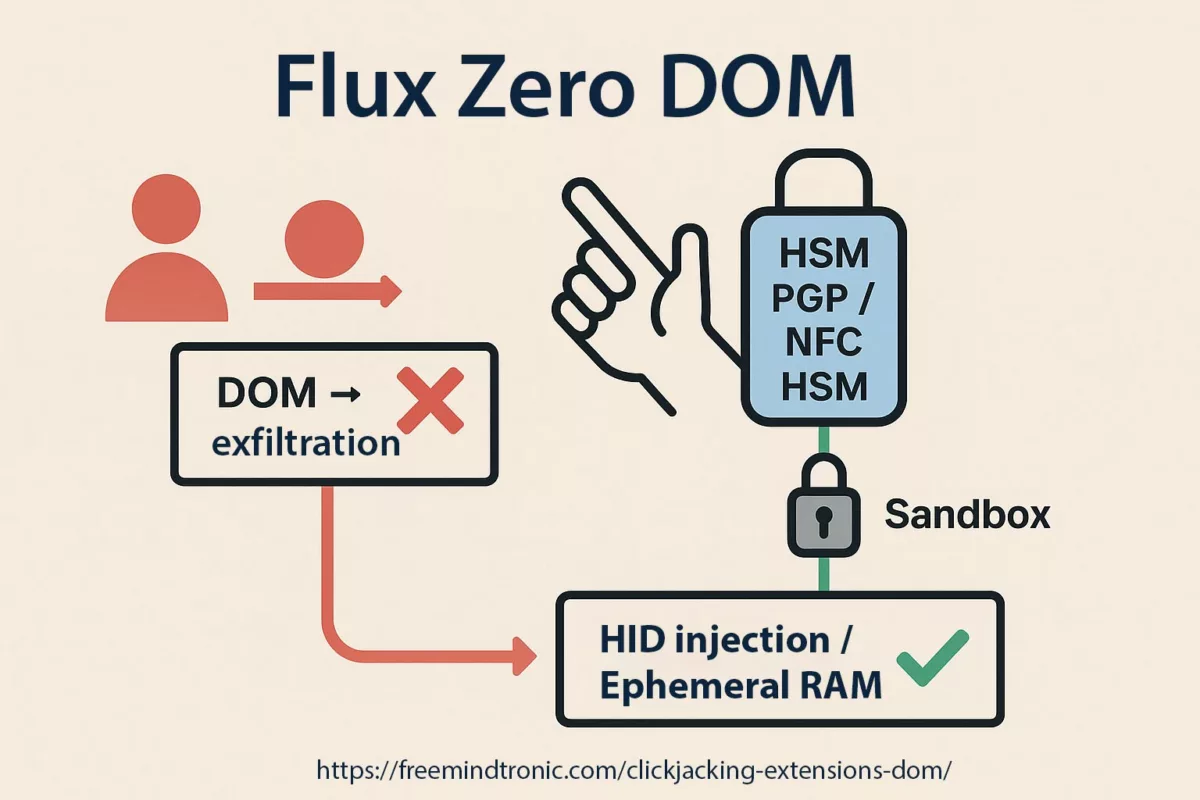

Why PassCypher’s architecture makes a “local Mixpanel” impossible

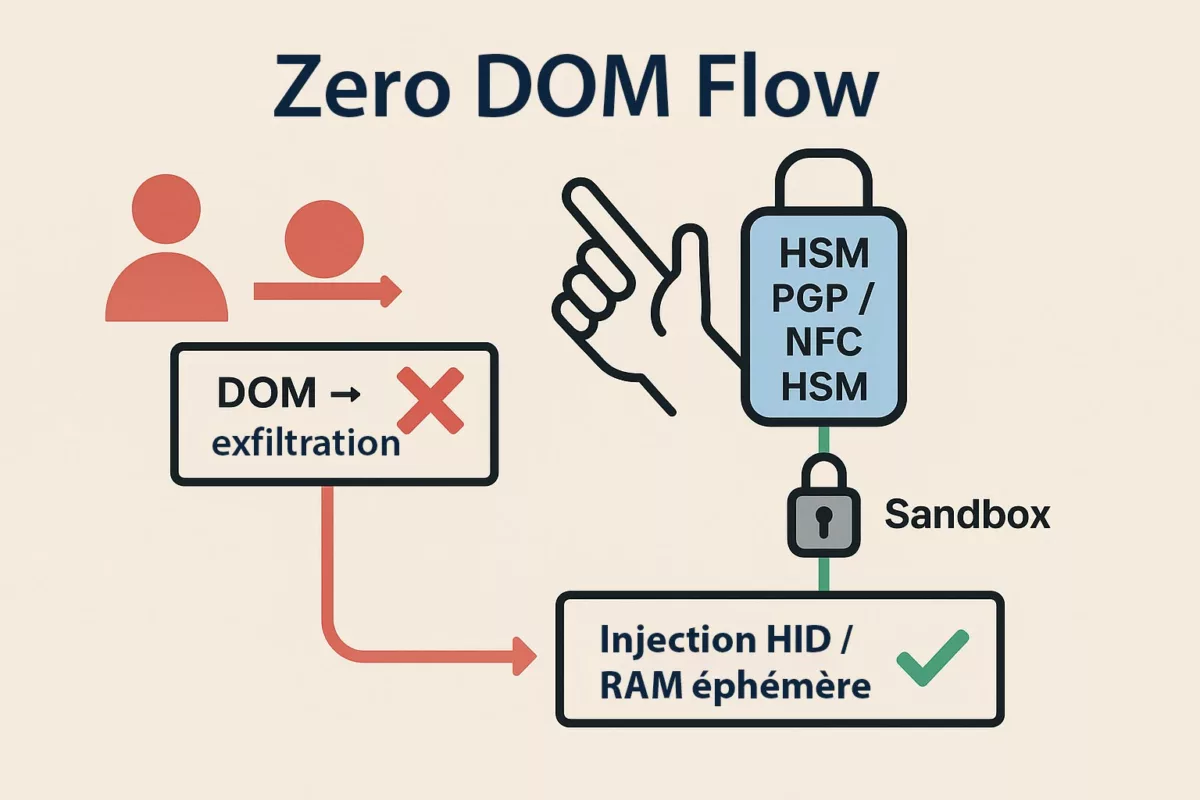

One of the core lessons from the OpenAI Mixpanel breach is that a leak does not need to contain passwords to become a lever for mass attacks. It only needs to expose identity, usage and context metadata that feed phishing and social engineering. This is exactly the class of attacks that the PassCypher HSM PGP architecture eliminates at the root.

Unlike centralised solutions that depend on third-party providers, PassCypher relies on no cloud, no backend, no datastore. Its operation structurally removes the vectors that made the AI data leak via Mixpanel possible:

- No server: no remote service able to collect or correlate metadata.

- No database: no exploitable footprint, no user profile to compromise.

- No master password: no single point of total compromise, no credential that can be phished once and reused everywhere.

- Containers always encrypted: secrets are never mounted in clear text, even locally.

- Decryption only in volatile memory: never on disk, never persistent, never exfiltrable at rest.

- Split decryption key: no single part unlocks the data; the full key only exists in RAM at runtime.

This approach guarantees that secrets remain undecryptable outside the HSM and that using PassCypher generates no exploitable metadata for any third party. Where the AI Mixpanel breach exposes the consequences of a centralised architecture, PassCypher proposes a model where digital sovereignty is not declarative but material and operational.
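The split-key principle listed above can be illustrated with the simplest possible construction, a two-share XOR split. This is a generic textbook scheme shown for intuition only; it is an assumption of this sketch and not a description of how PassCypher actually segments its keys.

```python
import secrets
from typing import Tuple


def split_key(key: bytes) -> Tuple[bytes, bytes]:
    """Split a key into two shares; each share alone is random noise."""
    share_a = secrets.token_bytes(len(key))
    share_b = bytes(k ^ a for k, a in zip(key, share_a))
    return share_a, share_b


def combine(share_a: bytes, share_b: bytes) -> bytes:
    """Rebuild the key in memory from both shares."""
    if len(share_a) != len(share_b):
        raise ValueError("shares must have the same length")
    return bytes(a ^ b for a, b in zip(share_a, share_b))
```

If the two shares are kept in different places, stealing one of them reveals nothing about the key, and the full key only exists for as long as the result of `combine` stays in memory.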

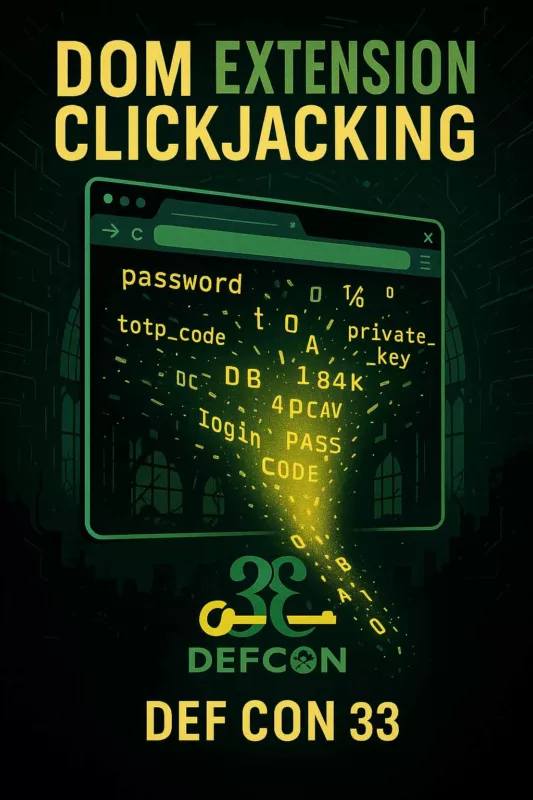

Industry signal — External recognition of sovereign architectures

In 2026, PassCypher was selected as a finalist for Best Cybersecurity Solution at the Intersec Awards 2026, an international event focused on security, resilience and critical infrastructure protection.

This recognition does not replace technical proof. However, it confirms that sovereign, offline, database-free security models—once considered niche—are now gaining institutional and industry-level attention in response to systemic risks exposed by incidents such as the AI Mixpanel metadata breach.

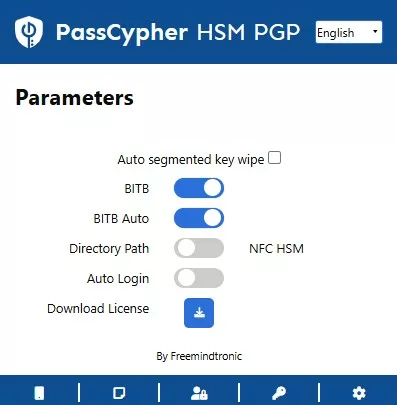

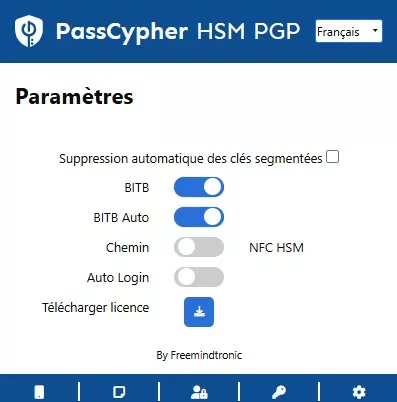

Demo video — Sovereign AI / ChatGPT login with PassCypher HSM PGP

After analysing how an AI metadata leak at a provider like Mixpanel can fuel highly targeted phishing and social-engineering attacks, this video presents Freemindtronic’s concrete answer: a sovereign connection to AI / ChatGPT orchestrated by PassCypher HSM PGP, with no room for data-leak scenarios or fake login screens.

AI / ChatGPT in 3 clicks: ID → Password → TOTP PIN

The demo shows a real AI / ChatGPT login in a standard browser and secures it with an HSM-based architecture:

- Click 1 — Identifier: PassCypher HSM PGP retrieves the login identifier from the HSM, decrypts it, and injects it—without ever storing it in a centralised database or in the cloud.

- Click 2 — Password: PassCypher HSM PGP manages the AI password locally, protects it with the HSM, and inserts it in a single action. No data flows through any analytics provider.

- Click 3 — TOTP PIN: PassCypher HSM PGP generates the one-time code inside a dedicated TOTP sandbox and injects it into the 2FA field. The whole process takes less than four seconds, with no manual typing and no copy-paste.

Attackers can use metadata from the OpenAI Mixpanel breach to mimic a login environment and trick users. PassCypher reduces human error at the source: the user clicks, the HSM orchestrates, and no sensitive entry travels in clear text.
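For readers unfamiliar with how the one-time code in the third click is derived, here is a minimal sketch of the public TOTP algorithm (RFC 6238, built on HOTP from RFC 4226) using only the Python standard library. It shows the standard computation only; the sandboxing and field injection described above are specific to PassCypher and are not reproduced here.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 over an 8-byte big-endian counter."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, unix_time: int, step: int = 30,
         digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP where the counter is the current time step."""
    return hotp(secret, unix_time // step, digits)
```

Because the code depends only on the shared secret and the clock, the secret is the asset to protect: generating the code outside the browser context, as the checklist recommends, keeps that secret away from the page that might be a look-alike.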

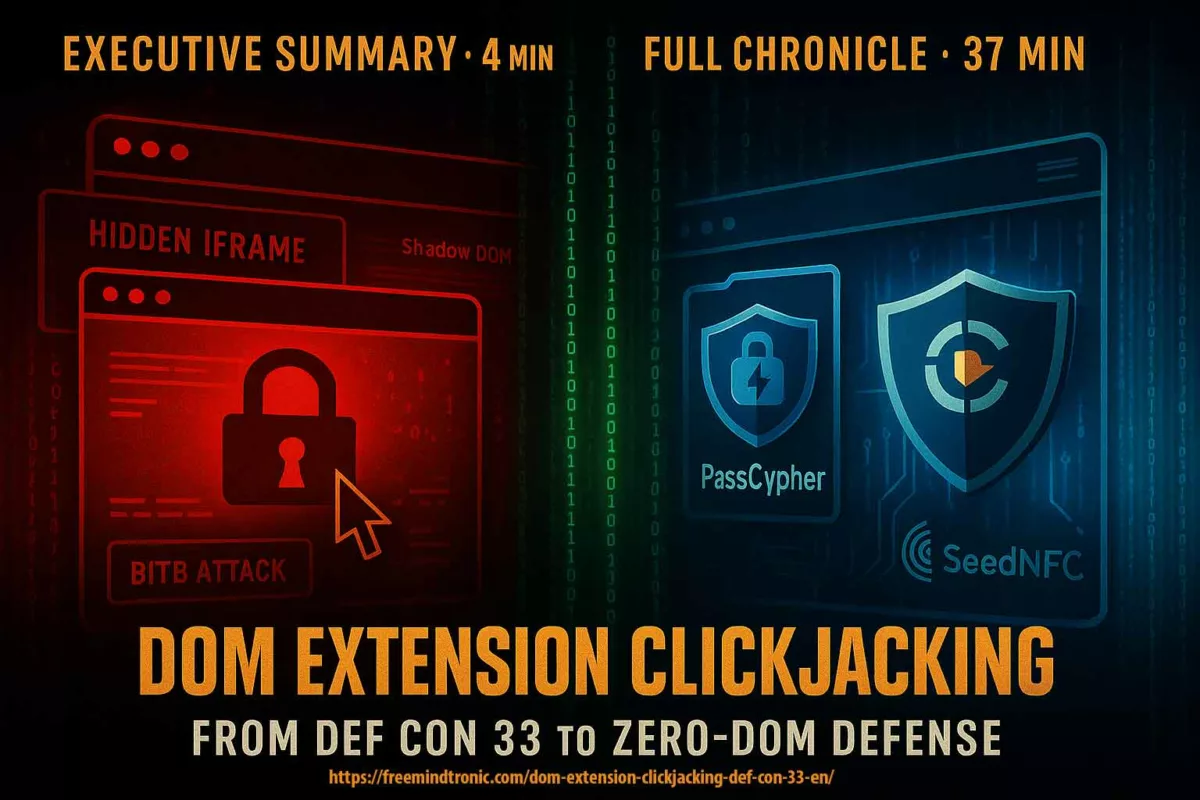

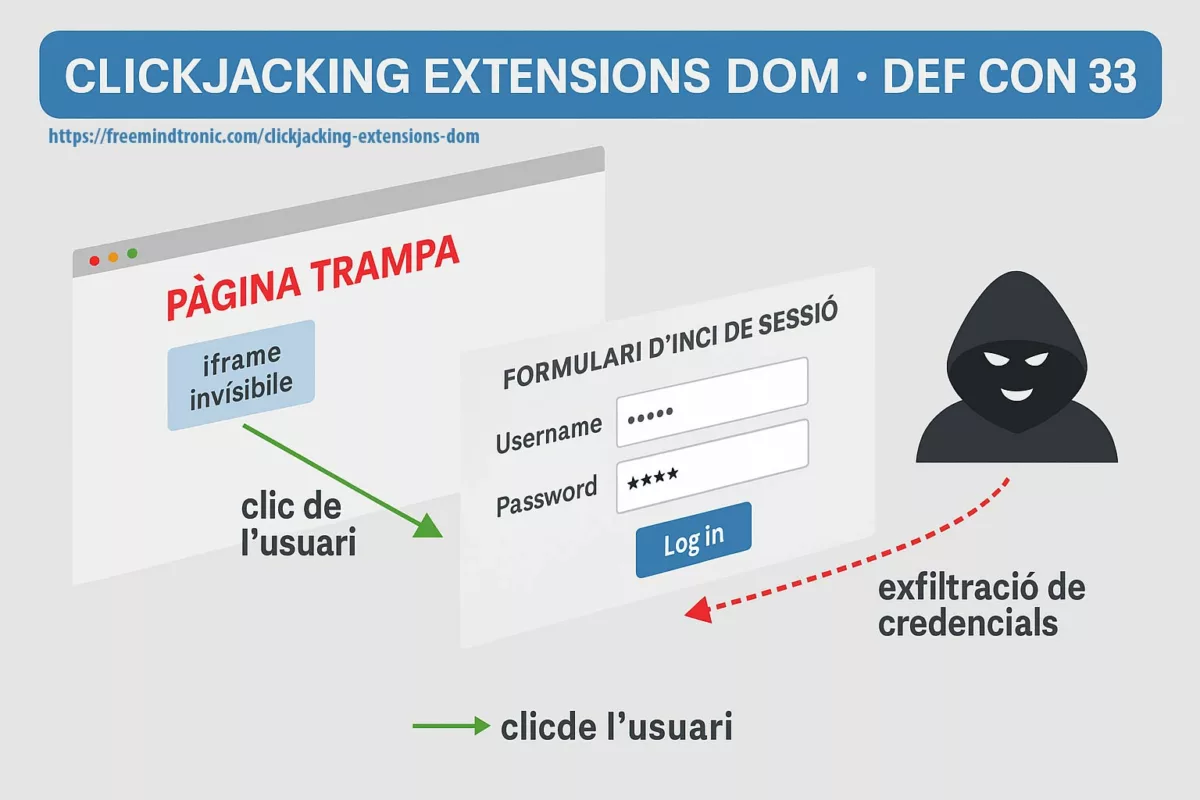

Full anti-phishing stack: URL sandbox, anti-BitB, anti-pwned

The video doesn’t just demonstrate a fast login. It also shows protections that directly address the risks revealed by the AI metadata leak:

- URL sandbox anti-phishing: before any autofill, PassCypher HSM PGP checks the real URL inside a sandbox. It detects and blocks redirections and look-alike domains.

- Anti-BitB (Browser-in-the-Browser) protection: PassCypher HSM PGP identifies fake pop-ups or fake login windows (a “browser” simulated inside the page). If the environment does not match the HSM sandbox, it refuses to inject secrets.

- Automatic “pwned” check: at each step (identifier, password), PassCypher HSM PGP checks whether the data appears in known dumps or compromised datasets. If it detects “pwned” risk, it warns the user before use.

- TOTP sandbox & URL binding: PassCypher HSM PGP generates and injects TOTP in an isolated space bound to the verified URL. A phishing site or BitB window cannot reuse the code.
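The idea of checking the real URL before any autofill can be sketched as a strict origin comparison: a secret is released only when the page's scheme, host and port match the origin recorded at enrolment. This is a simplified illustration under stated assumptions, not PassCypher's implementation; the function names are hypothetical and real products add further checks (redirect chains, frame ancestry, certificate state).

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit


def normalise_origin(url: str) -> Optional[Tuple[str, int]]:
    """Return (ascii_host, port) for an https URL, or None if unusable."""
    try:
        parts = urlsplit(url)
        port = parts.port
    except ValueError:
        return None
    if parts.scheme != "https" or not parts.hostname:
        return None
    try:
        # Encode internationalised names so homoglyph hosts compare unequal.
        host = parts.hostname.encode("idna").decode("ascii")
    except UnicodeError:
        return None
    return host, port or 443


def may_autofill(page_url: str, enrolled_url: str) -> bool:
    """Allow injection only on an exact origin match."""
    page = normalise_origin(page_url)
    return page is not None and page == normalise_origin(enrolled_url)
```

An exact match is deliberately stricter than a "contains the brand name" test: a look-alike host that merely starts with the legitimate domain, or a downgrade to plain HTTP, is rejected.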

By combining these mechanisms, PassCypher HSM PGP changes the game: even when attackers obtain a dataset from the OpenAI Mixpanel breach with detailed target information, classic phishing, BitB, and password-reuse scenarios stop working.
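The automatic “pwned” check mentioned above can be illustrated with the k-anonymity range query popularised by the Pwned Passwords service: only the first five hex characters of the password's SHA-1 hash are sent, and the comparison against returned suffixes happens locally. Whether PassCypher uses this exact mechanism is not stated in this chronicle, so treat the sketch as an assumption. Here `range_body` stands in for the text response of such a query (one `SUFFIX:COUNT` pair per line), and no network call is made.

```python
import hashlib
from typing import Tuple


def sha1_prefix_suffix(password: str) -> Tuple[str, str]:
    """Split the uppercase SHA-1 hex digest into a 5-char prefix and the rest."""
    digest = hashlib.sha1(password.encode("utf-8")).hexdigest().upper()
    return digest[:5], digest[5:]


def pwned_count(password: str, range_body: str) -> int:
    """Look up the local suffix in a range response; 0 means not found."""
    _, suffix = sha1_prefix_suffix(password)
    for line in range_body.splitlines():
        candidate, _, count = line.strip().partition(":")
        if candidate.upper() == suffix and count.strip().isdigit():
            return int(count)
    return 0
```

The design point is that neither the password nor its full hash leaves the machine, which fits the chronicle's broader argument about keeping identity-related data out of third-party hands.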

From demo to digital sovereignty

This video goes beyond a technical how-to. It proves you can secure AI / ChatGPT access without adopting the centralisation logic behind the original AI metadata breach:

- Attackers can’t target PassCypher servers because PassCypher runs locally: the HSM stays on-prem.

- Attackers can’t exfiltrate a central password database because none exists.

- Analytics providers never see secret flows because the architecture does not rely on them.

- Your AI / ChatGPT login stays safe even if new metadata leaks appear.

The Mixpanel model highlights the limits of centralised architectures and third-party providers. The PassCypher HSM PGP demo turns digital sovereignty into a tangible gesture: three clicks, under four seconds, zero secret exposed.

Sovereign AI / ChatGPT login in 3 clicks with PassCypher HSM PGP (ID, password, TOTP PIN), with no secrets stored in the cloud.

PassCypher HSM PGP licensing model — Sovereign usage, predictable cost

The video also addresses a point many digital-sovereignty discussions overlook: the licensing model. Many cloud-security solutions bill “per user” or “per account” and add yet another dependency on third-party providers. PassCypher HSM PGP takes the opposite route.

PassCypher ties the licence to the PassCypher Engine and binds it to hardware (motherboard serial number), not to user identity.

Concretely:

- PassCypher lets multiple users share a single licence on the same machine.

- PassCypher turns the workstation into the sovereign anchor of security, not yet another cloud account.

- PassCypher keeps usage conditions clear: hourly, daily, weekly, monthly or annual licence, up to 1 year or 2 years at €199 depending on the chosen plan.

This choice does more than optimise costs. It aligns the licensing model with the doctrine behind the response to the OpenAI Mixpanel breach: less centralised data, less dependency on providers, more local control. Where metadata leaks feed phishing and exploitable “profiles”, the PassCypher Engine turns the machine into a clear, controlled boundary without creating another exploitable user database.

Final comparison — Classic login vs sovereign login facing an AI metadata breach

After describing the attack, its context and its impacts, it helps to visualise the concrete consequences of a metadata leak in two opposing architectures: the cloud-centric model built on centralised databases, and the sovereign model centred on local HSMs.

| Aspect | Classic (centralised) login | Sovereign login (PassCypher HSM / NFC HSM) |
|---|---|---|
| Secret storage | Servers and central databases at the provider and its vendors | Secrets never stored in a database; only in offline HSMs |
| Effect of an OpenAI Mixpanel breach | Exposure of identities exploitable to reset or steal accounts | Limited metadata, impossible to act without the physical HSM |
| Phishing vector | Emails and forms easily mimicked with credible look-alikes | Sandboxed TOTP and signed flows shrink the phishing surface |
| Resilience to third-party providers | Strong dependency on analytics, identity services and clouds | Local architecture, reinforced digital sovereignty, few or no critical providers |
| Post-quantum readiness | Often partial or postponed | Cryptography and models designed for long-term durability, quantum-resilient compatibility |
| Overall trust | Fragile, heavily dependent on the subcontracting chain | Reinforced by the absence of centralised databases and mass-leak scenarios |

Visually, the difference is clear: in a centralised model, a single metadata leak at a provider like Mixpanel can trigger a crisis of trust. In a sovereign model based on PassCypher HSM PGP and NFC HSM, the attacker runs into a wall: they lack the physical element needed to turn the AI metadata breach into a successful attack.

FAQ — OpenAI Mixpanel breach & data sovereignty

Did the Mixpanel breach expose passwords, API keys or prompts?

Data actually exposed

First, it’s important to note that the OpenAI Mixpanel breach did not compromise passwords, API keys or prompts. However, sensitive metadata (emails, operating systems, browsers, approximate locations) was exported. In practice, this is enough to prepare targeted attacks, even if the leak may look “limited” at first glance.

Why is metadata dangerous?

Risks linked to metadata

Metadata can be used to build highly credible phishing and social-engineering campaigns. An attacker can tailor messages to time zones, technical environments or victims’ roles. As a result, an OpenAI data leak limited to metadata is still sufficient to trick developers or administrators.

Is there a CVE associated with the OpenAI Mixpanel breach?

Incident classification

Unlike other documented issues, there is no CVE identifier associated with the OpenAI Mixpanel breach. It is an internal infrastructure compromise at Mixpanel, not a traditional software vulnerability. This underlines that risks come not only from code, but also from third-party providers and their governance.

What other security incidents affected OpenAI before Mixpanel?

Incident timeline

- 2023 — Redis bug exposing chat conversations and payment data.

- 2024 — API vulnerabilities enabling indirect exfiltration of prompts.

- 2025 — OpenAI Mixpanel breach exposing API metadata.

Taken together, these incidents reveal a systemic recurrence linked to centralised architectures and external dependencies.

How can a government or company avoid creating a “local Mixpanel”?

Sovereignty strategy

Start by minimising stored data, segmenting environments and limiting reliance on external analytics providers. Then, entrust secrets to local HSMs rather than cloud databases. In short, do not recreate internally what you criticise in external vendors: that is the key to genuine digital sovereignty.

What role do PassCypher HSM PGP and PassCypher NFC HSM play?

Sovereign solutions

PassCypher HSM PGP and PassCypher NFC HSM embody this sovereign paradigm: no servers, no centralised password databases, no secrets stored in the cloud. Keys and identities remain in local HSMs, which drastically reduces the impact of an OpenAI Mixpanel breach or any similar incident.

What specific risks does this pose for developers and enterprises?

Priority targets

Developer forums and security experts agree that API accounts are the most exposed. These accounts provide access to critical systems, sensitive integrations and automations. A metadata leak can be used to mount technical phishing attacks or impersonate organisational identities.

What immediate actions did OpenAI take?

Official response

According to OpenAI’s official communication, the company immediately terminated its partnership with Mixpanel, audited affected datasets and notified API users. It also reiterated basic security measures, particularly MFA and vigilance against suspicious emails.

Mini-glossary

Metadata

Technical phishing

BitB (Browser-in-the-Browser)

Third-party provider

Vendor risk

Strategic outlook — After the AI Mixpanel breach metadata, which sovereign path?

A revealing turning point for AI platforms

The AI Mixpanel breach marks a major turning point. It doesn’t shock by the volume of leaked data; it hits the relationship between AI platforms, third-party providers, and professional users. A “simple” metadata leak can still crack trust across the entire ecosystem because it gives attackers enough context to target the right people with the right pretexts.

Strategic directions for states and enterprises

States and enterprises can take clear steps:

- Rethink outsourcing chains: map providers, reduce external analytics exposure, and enforce strict rules on metadata handling and retention.

- Generalise HSM-based approaches: deploy sovereign solutions such as PassCypher HSM PGP and PassCypher NFC HSM on critical accounts.

- Raise the bar for API access: adopt hardware-backed authentication, sandboxed TOTPs, and locally orchestrated secret rotation.

- Integrate digital sovereignty: treat it as an architecture requirement, not a compliance checkbox.

Centralisation vs. sovereignty

Another AI data leak or metadata breach will happen. The real question is where it lands. A centralised model concentrates risk and amplifies each incident. A sovereign model bounds impact and turns incidents into manageable events instead of systemic trust failures.

Freemindtronic doctrine: turning crisis into opportunity

Freemindtronic applies a simple doctrine: use every incident like the AI Mixpanel breach to strengthen digital sovereignty. It moves secrets, identities, and access toward HSM-based controls that stay out of reach—even when cloud providers stumble.

Want to go further?

Several chronicles in the Digital Security section explore these issues in depth: digital sovereignty, data black markets, and reliance on third-party providers.

Official sources — Understanding the AI Mixpanel breach at the source

- OpenAI: What to know about a recent Mixpanel security incident

- Mixpanel: Our response to a recent security incident

- OpenAI Blog: March 20 ChatGPT outage (Redis bug, 2023)

- SecurityWeek: OpenAI API vulnerabilities (2024)

- Bitdefender: Mixpanel incident exposes limited API user data

- Proton: OpenAI data breach: what happened and how to stay safe

These sources make it possible to cross-check the timeline, scope and exact nature of the AI / Mixpanel data breach, while confirming that passwords, prompts and payments were not compromised. They also underline the need to better control third-party providers and to reduce dependency on external analytics when digital sovereignty is at stake.

What we didn’t cover in the AI Mixpanel breach metadata

- The precise implications of international legal frameworks (GDPR, CLOUD Act, etc.) for this metadata breach.

- A full benchmark of all secret-management solutions competing with PassCypher HSM PGP and PassCypher NFC HSM.

- A detailed study of cyber-insurance strategies facing metadata leaks related to third-party providers.

- Long-term economic analyses of the cost of non-sovereignty for states and large groups.

These topics would deserve dedicated chronicles. Here, the goal was to focus on the direct link between the OpenAI Mixpanel metadata breach and the design of sovereign security architectures, based on concrete solutions such as PassCypher HSM PGP and PassCypher NFC HSM.