2026, Digital Security

Zero-Knowledge Downgrade Attacks — Structural Risks

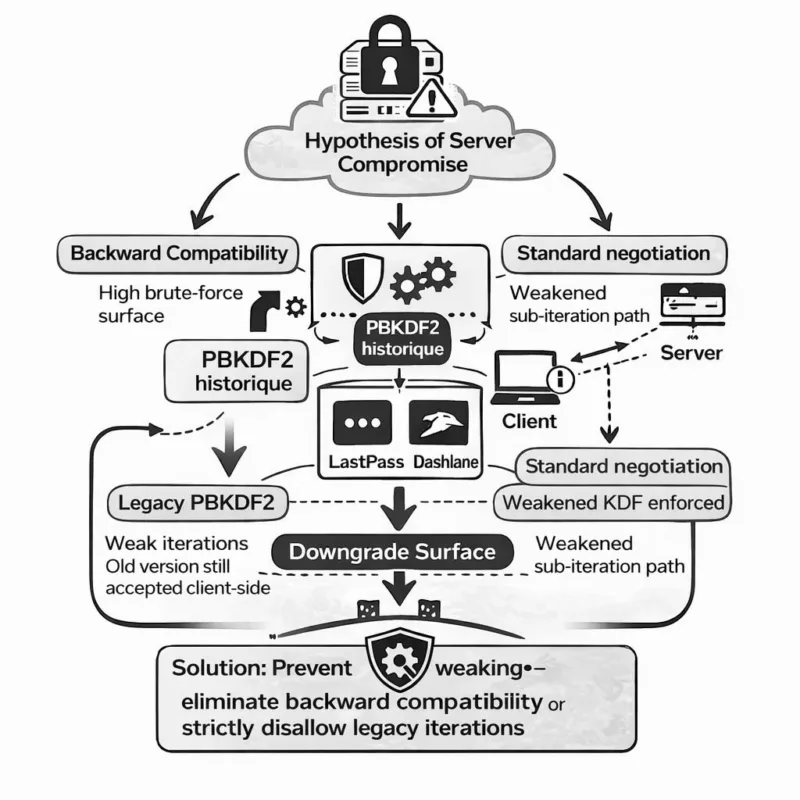

Zero-Knowledge Downgrade Attacks: downgrade paths against Bitwarden, LastPass, and Dashlane show how cryptographic backward compatibility can structurally weaken a zero-knowledge architecture. When KDF parameters can be influenced server-side, zero-knowledge becomes conditionally vulnerable under an active compromise assumption. This dossier explains why encryption primitives are not “broken”, but why parameter governance, version discipline, and cryptographic sovereignty matter beyond marketing. It distinguishes a theoretically sound zero-knowledge design from a zero-knowledge implementation made vulnerable by downgrade-capable choices. It also clarifies why architectures that remove server negotiation entirely—plus Freemindtronic’s patented offline segmented-key authentication mechanism developed in Andorra—cannot, by construction, fall within the downgrade perimeter studied here.

Executive Summary

Context

Academic researchers affiliated with ETH Zurich and USI reviewed the robustness of the zero-knowledge model in several major password managers. Their objective was not to defeat cryptographic primitives, but to test how downgrade attacks can exploit legacy compatibility layers.

Primary Finding

Zero-knowledge remains cryptographically valid. However, real-world resilience depends on strict governance of parameters and on preventing a compromised server from imposing degraded negotiation paths.

Scope

The study focuses on Bitwarden, LastPass, and Dashlane. It highlights how historical PBKDF2 configurations and backward compatibility can reduce brute-force resistance under a server-compromise assumption.

Doctrinal Implication

Zero-knowledge protects against passive compromise. It does not inherently protect against active protocol manipulation if the client accepts weakened parameters.

Strategic Differentiator

Security maturity is no longer measured only by “strong encryption.” It depends on KDF parameter governance, version discipline, and eliminating downgrade-capable negotiation paths. The debate shifts from “Is it encrypted?” to “Who controls the cryptographic floor?”

Essential Point

Zero-knowledge is not broken.

It becomes structurally fragile when backward compatibility allows a compromised server to enforce weaker KDF parameters.

True resilience requires strict client-side validation, a non-negotiable minimum cryptographic floor, and elimination of downgrade negotiation.

Technical Note

Reading time (summary): ~4 minutes

Full reading time: ~29–33 minutes

Publication date: 2026-02-18

Level: Cryptography / Audit / Security Architecture

Positioning: Zero-Knowledge Governance & Downgrade Resilience

Category: Digital Security

Languages available: · FR · EN

Impact level: 9.1 / 10 — trust model & cryptographic governance

In cybersecurity and digital architecture, this analysis belongs to the Digital Security category. It continues Freemindtronic’s work on cryptographic sovereignty, user control, and resilient architectures.

- Executive Summary

- Why Zero-Knowledge Can Become Vulnerable

- Downgrade Attacks: Technical Mechanism

- Product-Level Analysis

- A Systemic Reading of “Vulnerable Zero-Knowledge”

- Threat Model

- Threat Model Summary

- Strategic Signals

- Strong Signal

- Medium Signal

- Weak Signal

- Limits & Clarifications

- User Impact

- What This Does Not Mean

- Structural Countermeasures

- Software Hardening

- Client-Side Validation

- Sovereign Architecture

- Architectural Comparison

- Sovereign Alternative

- Cryptographic Principle

- Segment Generation & Protection

- RAM-Only Encryption

- Patented Segmented Authentication

- Official References

- Doctrinal Evolution

- Strategic Perspective

- FAQ — Vulnerable Zero-Knowledge

- Glossary

Why Zero-Knowledge Can Become Vulnerable

Concise definition: a zero-knowledge model becomes vulnerable when a server can influence or degrade the cryptographic parameters the client accepts. The flaw is not "broken encryption": the algorithm itself is unaffected. The weakness is an architecture that permits downgrade negotiation, which lowers real-world strength.

The zero-knowledge model rests on a simple principle: the server never holds the decryption key.

In the ideal version:

- The key is derived client-side from a master password via a KDF such as PBKDF2 or Argon2id

- The server stores only encrypted data

- Decryption happens client-side

This model protects against:

- Malicious employees

- Passive server compromise

It does not protect against:

- Active protocol manipulation

- Downgrade to weaker parameters
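The client-side derivation step in the ideal model can be sketched as follows. This is a minimal illustration using Python's standard `hashlib.pbkdf2_hmac`, not any vendor's actual implementation; the function name `derive_vault_key`, the iteration count, and the salt and key sizes are assumptions chosen for the example.

```python
import hashlib
import os

# Illustrative values only; they are assumptions, not vendor settings.
ITERATIONS = 600_000
SALT_BYTES = 16
KEY_BYTES = 32  # 256-bit derived key


def derive_vault_key(master_password: str, salt: bytes, iterations: int) -> bytes:
    """Derive a symmetric key on the client with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac(
        "sha256",
        master_password.encode("utf-8"),
        salt,
        iterations,
        dklen=KEY_BYTES,
    )


# The salt is random and not secret; the master password never leaves the client.
salt = os.urandom(SALT_BYTES)
key = derive_vault_key("correct horse battery staple", salt, ITERATIONS)
print(len(key))  # 32
```

The derived key depends on the iteration count as well as on the password and salt. Whoever chooses that count therefore influences how expensive each guess is for an attacker.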

Downgrade Attacks: Technical Mechanism

A downgrade attack forces an application to use an earlier, less robust version of a cryptographic protocol or parameter set.

In this study:

| Step | Description | Consequence |
|---|---|---|
| 1 | Server compromise | Attacker controls API responses |
| 2 | Legacy KDF parameters enforced | Weakened key derivation strength |
| 3 | Optimized brute-force | Credential recovery becomes cheaper |

Core problem: backward compatibility preserves historically weaker cryptographic settings.
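To make "cheaper" concrete: PBKDF2's cost per password guess grows linearly with the iteration count, so a downgrade divides the attacker's work by the ratio of the two counts. The sketch below shows that arithmetic; the helper name `brute_force_speedup` and both iteration counts are hypothetical values for illustration, not figures from the study.

```python
def brute_force_speedup(current_iterations: int, downgraded_iterations: int) -> float:
    """Return how many times cheaper each guess becomes after a downgrade.

    Assumes per-guess cost is proportional to the PBKDF2 iteration count.
    """
    if current_iterations <= 0 or downgraded_iterations <= 0:
        raise ValueError("iteration counts must be positive")
    return current_iterations / downgraded_iterations


# Hypothetical example: a modern setting forced down to a legacy one.
print(brute_force_speedup(600_000, 5_000))  # 120.0
```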

Product-Level Analysis

Summary table:

| Password Manager | KDF Model | Downgrade Surface | Corrective Measures |
|---|---|---|---|
| Bitwarden | Configurable PBKDF2 | Legacy parameters can be activated | Higher iterations & minimum floors |
| LastPass | PBKDF2 (legacy accounts) | Old iteration counts on historical vaults | Forced migration & parameter uplift |
| Dashlane | Proprietary architecture | Older-generation compatibility | Progressive hardening |

Important: the researchers informed vendors before publication. The identified weaknesses led to hardening measures (iteration increases, minimum floors, migration guidance). However, the downgrade surface linked to backward compatibility cannot be fully removed without breaking access for certain legacy vaults. This structural constraint stems from maintaining legacy access, not from a failure of the cryptography.

The academic study covers only three password managers: Bitwarden, LastPass and Dashlane. Together they account for tens of millions of users worldwide. However, conclusions should not be generalized to all zero-knowledge implementations. The research demonstrates a structural risk under an active server compromise assumption, not a publicly confirmed mass exploitation event.

A Systemic Reading of “Vulnerable Zero-Knowledge”

This case reveals three structural tensions:

- Innovation vs backward compatibility

- Zero-knowledge marketing vs operational reality

- Cloud security vs server dependency

Zero-knowledge is not “false.”

It is dependent on cryptographic governance.

As long as a server can impose parameters, it influences real-world strength. This does not mean every password manager is vulnerable. It highlights a general architectural tension in cloud-mediated zero-knowledge systems: backward compatibility vs strict enforcement of a non-negotiable cryptographic floor.

A “vulnerable zero-knowledge” system does not mean the algorithm is weak. It means the architecture still contains downgrade negotiation paths that become exploitable under specific conditions.

Threat Model for Vulnerable Zero-Knowledge

The studied scenario assumes no “magic break” in encryption. It relies on a structured assumption: active compromise of the server or partial control over API infrastructure.

In this architecture:

- The attacker controls server responses

- They can enforce historical KDF parameters

- The client accepts them if they are still considered valid

- Offline brute-force becomes economically easier

This is not an opportunistic attack against consumers. It is a targeted post-compromise scenario requiring server control or active interception.

Key point: the risk appears only if the client accepts downgrade negotiation. If the client rejects weak parameters, the downgrade fails.
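The rejection logic described above can be sketched as a hard-coded client-side floor. This is a minimal sketch under stated assumptions: the names `KdfParams`, `DowngradeRejected`, and `validate_server_params`, the algorithm label, and the floor value are all hypothetical and do not describe any vendor's client.

```python
from dataclasses import dataclass

# Hypothetical floor compiled into the client; the server cannot lower it.
ALLOWED_ALGORITHM = "pbkdf2-sha256"
MIN_ITERATIONS = 600_000


class DowngradeRejected(Exception):
    """Raised when server-supplied KDF parameters fall below the floor."""


@dataclass(frozen=True)
class KdfParams:
    algorithm: str
    iterations: int


def validate_server_params(params: KdfParams) -> KdfParams:
    """Return the parameters unchanged, or refuse to derive a key at all."""
    if params.algorithm != ALLOWED_ALGORITHM:
        raise DowngradeRejected(f"unexpected algorithm: {params.algorithm}")
    if params.iterations < MIN_ITERATIONS:
        raise DowngradeRejected(
            f"{params.iterations} iterations is below the floor of {MIN_ITERATIONS}"
        )
    return params


# A compromised server offering legacy settings is refused before derivation.
try:
    validate_server_params(KdfParams("pbkdf2-sha256", 5_000))
except DowngradeRejected as err:
    print(f"rejected: {err}")
```

The check has to run before the master password is fed into the KDF. Otherwise the client has already produced material derived under the weakened parameters.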

Threat Model Summary

| Condition | Required? | Impact |
|---|---|---|
| Server compromise | Yes | Weakened parameters can be enforced |
| Client acceptance | Yes | Brute-force surface opens |
| Mass exploitation | Not observed | Targeted scenario |

Strategic Signals of Vulnerable Zero-Knowledge

🔴 Strong Signal

- Backward compatibility becomes a structural attack surface.

- KDF parameter governance is now a strategic security issue.

- “Absolute zero-knowledge” marketing must account for negotiable parameters.

🟠 Medium Signal

- Legacy or weakly configured accounts are more exposed.

- Migration to Argon2id becomes a priority.

- Minimum iteration policies must be hardened.

🟢 Weak Signal

- No public large-scale exploitation has been observed.

- Vendors responded quickly and transparently.

- Cryptographic primitives remain solid.

Overall reading: this is not the collapse of zero-knowledge. It is a reminder that security depends on implementation discipline.

Limits & Clarifications

It is essential to define the perimeter precisely:

- The scenario relies on an active server compromise assumption.

- No public mass exploitation has been documented.

- Vendors hardened their parameter floors.

- Risk varies with the adopted threat model.

This research reveals a structural tension—not a generalized compromise of all zero-knowledge password managers.

Practical User Impact

For end users, risk depends primarily on account configuration and the hardening level in place.

Practical recommendations

- Check PBKDF2 iteration count or whether Argon2id is enabled.

- Increase the minimum cryptographic floor if configurable.

- Use a long, random master password.

- Update legacy accounts configured with historical parameters.

A correctly configured account using modern parameters drastically reduces downgrade risk in practice.

What This Does Not Mean

- AES is broken

- PBKDF2 is broken as a standardized key derivation function

- All zero-knowledge password managers are compromised

- A mass exploitation wave is underway

- Zero-knowledge is obsolete

Structural Countermeasures

In a vulnerable zero-knowledge scenario, the response is not only “stronger algorithms.” It is removing server-side negotiation surfaces.

Three mitigation layers:

1 — Software Hardening

- Enforce minimum parameters

- Disable legacy schemes

- Make Argon2id mandatory where possible

2 — Client-Side Validation

- Reject weak parameters

- Cryptographic version locking

3 — Sovereign Architecture

- Secrets outside the browser

- Offline HSM

- No server negotiation
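The "cryptographic version locking" item in layer 2 can be read as a local ratchet: the client remembers the parameters it last accepted and refuses anything weaker. The sketch below illustrates one such reading; the pin-file format, the function name `check_against_pin`, and the exception `KdfRegression` are assumptions made for this example.

```python
import json
from pathlib import Path


class KdfRegression(Exception):
    """Raised when offered parameters are weaker than the locally pinned ones."""


def check_against_pin(pin_file: Path, algorithm: str, iterations: int) -> None:
    """Accept parameters only if they do not regress below the local pin."""
    if pin_file.exists():
        pinned = json.loads(pin_file.read_text(encoding="utf-8"))
        if algorithm != pinned["algorithm"]:
            raise KdfRegression("algorithm differs from the pinned one")
        if iterations < pinned["iterations"]:
            raise KdfRegression("iteration count is lower than the pinned one")
    # Record what was accepted so later sessions can only stay equal or go up.
    pin_file.write_text(
        json.dumps({"algorithm": algorithm, "iterations": iterations}),
        encoding="utf-8",
    )
```

In this sketch any algorithm change is refused, including an upgrade. A real client would need an explicit, user-approved migration path for moving to a stronger KDF.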

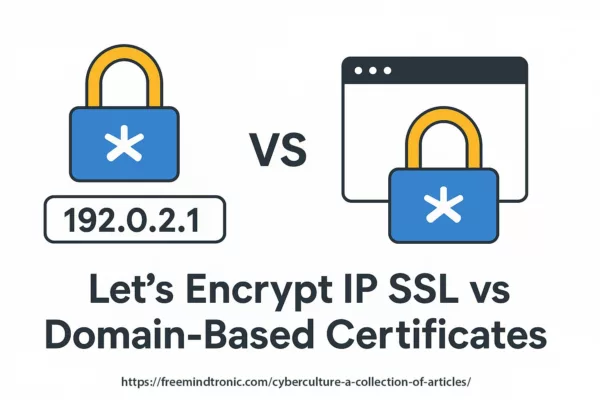

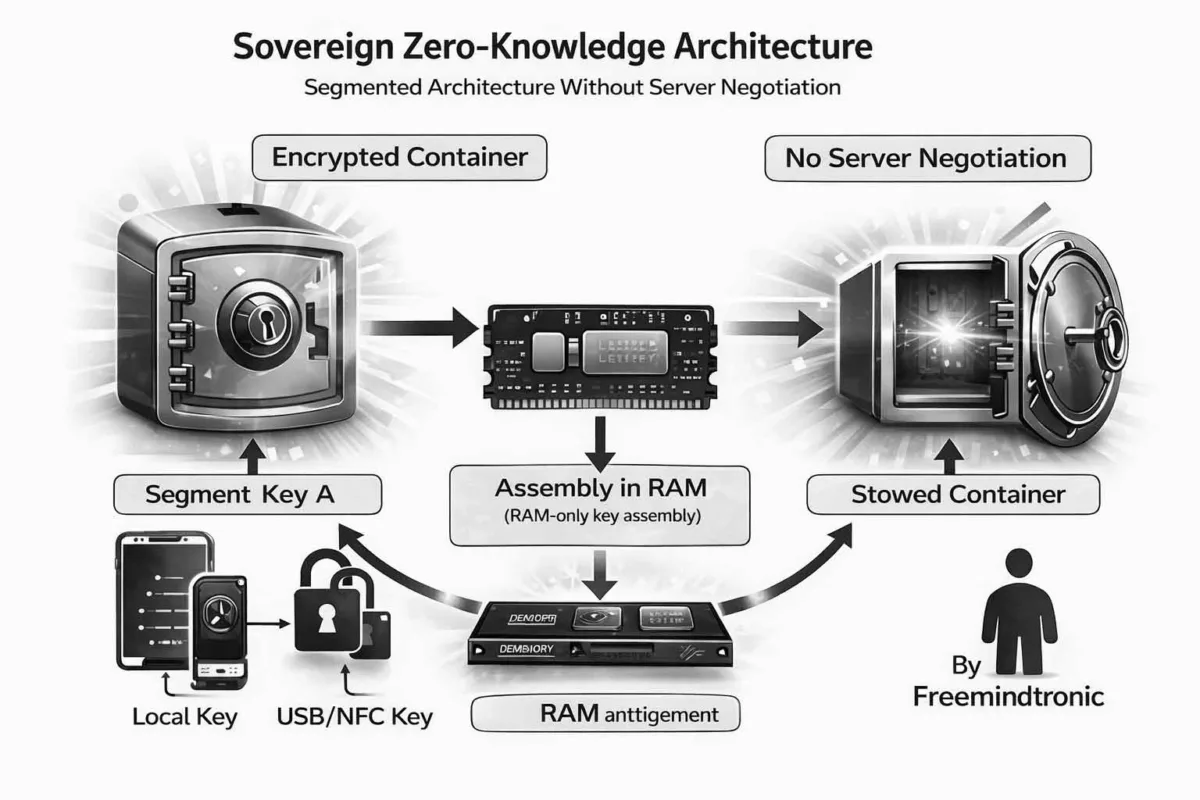

Architectural Comparison: Cloud Zero-Knowledge vs Segmented Architecture

| Criterion | Cloud Zero-Knowledge | PassCypher Segmented Architecture |
|---|---|---|
| Master key | Yes (master password) | No central master key |
| KDF derivation | Negotiable across versions | No server negotiation |
| Server dependency | Yes | No |
| Downgrade surface | Possible if backward compatibility exists | Non-existent |
| Cleartext persistence | Not intended but implementation-dependent | RAM-only |
| Single point of failure | Server / identity plane | None |

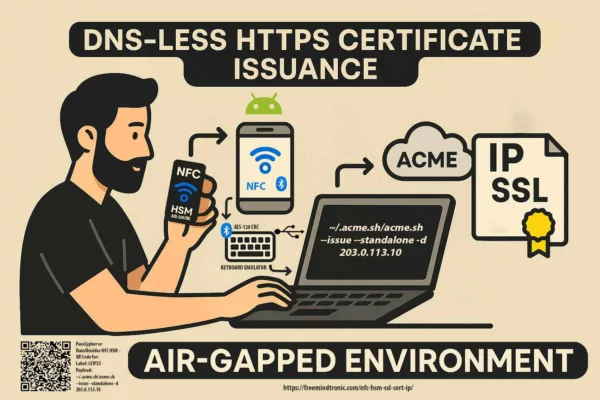

Sovereign Alternative: Centralization Without SSO & Segmented Keys

Unlike standard cloud architectures, PassCypher HSM PGP enables voluntary centralization without an identity server, without SSO, and without a global master key.

The principle relies on autonomous segmented keys combined at the user’s discretion.

Cryptographic Principle

Example:

- Two independent 256-bit key segments

- Stored separately (e.g., local storage + USB key)

- Assembled dynamically via concatenation

Concatenating two 256-bit keys yields:

2²⁵⁶ × 2²⁵⁶ = 2⁵¹² possibilities

≈ 1.34 × 10¹⁵⁴ combinations

This exceeds any realistic exhaustive attack capacity.
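The arithmetic above can be checked with a few lines of Python. This is only an illustration of the keyspace count for two concatenated random segments. It is not PassCypher's implementation, and the variable names are assumptions.

```python
import secrets

# Two independent 256-bit segments from a cryptographically secure RNG.
segment_a = secrets.token_bytes(32)
segment_b = secrets.token_bytes(32)

# Concatenation yields a 512-bit value.
combined = segment_a + segment_b

# Number of possible values for the combined 512-bit string.
keyspace = 2 ** (8 * len(combined))
print(len(combined) * 8)   # 512
print(f"{keyspace:.3e}")   # 1.341e+154
```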

Segment Generation & Protection

Each segment is:

- Randomly generated by a cryptographically secure RNG

- Independent from other segments

- Encrypted at rest when stored

This is not “splitting a master key.”

Each segment has full entropy (256 bits each in this example). Even alone, a segment remains computationally infeasible to guess.

RAM-Only Encryption & Decryption

In the PassCypher architecture, encryption and decryption occur exclusively in volatile memory (RAM), only for the minimal time required for auto-login.

This means:

- No clear secret is written to disk

- No decrypted data is persisted

- Containers stay encrypted at rest at all times

- The full key exists only temporarily in memory

This holds across:

- Android smartphones via NFC

- Windows

- macOS

Once the operation ends:

- Memory is released

- The concatenated key disappears

- The container remains encrypted

In cloud models, critical elements transit server layers and remote sync. In PassCypher, the secret exists in clear only in volatile memory, and only for the duration of the operation.
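The "assemble, use, discard" lifecycle described in this section can be sketched with a context manager that builds the combined key in a mutable buffer and overwrites it on exit. This is a conceptual sketch only: the name `assembled_key` is hypothetical, and Python gives no guarantee that other copies of the bytes (for example the input segments) are wiped, so it should not be read as how the product handles memory.

```python
from contextlib import contextmanager


@contextmanager
def assembled_key(segment_a: bytes, segment_b: bytes):
    """Yield the concatenated key in a mutable buffer, then zero that buffer."""
    key = bytearray(len(segment_a) + len(segment_b))
    key[: len(segment_a)] = segment_a
    key[len(segment_a):] = segment_b
    try:
        yield key
    finally:
        # Best-effort wipe of this buffer once the operation ends.
        key[:] = bytes(len(key))


with assembled_key(b"\x01" * 32, b"\x02" * 32) as key:
    print(len(key))  # 64; the key is usable only inside this block

print(any(key))  # False: the buffer has been overwritten with zeros
```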

International Patent Protection — Segmented-Key Authentication

The segmented-key authentication architecture used by PassCypher HSM PGP is protected by an international patent.

It covers:

- Independent randomly generated key segments

- Separate protected storage

- Local dynamic assembly via concatenation

- No centralized master key

- No server dependency or SSO

The mechanism is not identity-driven. It is possession-and-combination driven.

Patent protection does not cover AES-256-CBC itself (an open standard). It covers the segmented authentication architecture and its offline operating model.

Masterless Architecture

The PassCypher model relies on:

- No centralized master key

- No password-derived global key

- No server negotiation

- No central authorization database

Without the correct set of segments:

- The full key cannot be reconstructed

- The container remains permanently encrypted

- No partial exploitable secret is exposed

Centralization becomes a voluntary construct based on user-controlled segmented keys, protected at rest. This eliminates downgrade surfaces tied to server-side negotiation.

Official References on KDF & Zero-Knowledge Governance

- RFC 8018 — PKCS #5 v2.1 (PBKDF2)

- RFC 9106 — Argon2 Memory-Hard Function

- NIST SP 800-63B — Digital Identity Guidelines

- Bitwarden Security Whitepaper

- LastPass Technical Whitepaper

- Dashlane Security Architecture

- ETH Zurich — Information Security Group

- Università della Svizzera italiana (USI)

Read together, these sources support the view that the risk lies in versioning and parameter governance, not in “broken primitives.”

Doctrinal Evolution of the Zero-Knowledge Model

For a decade, the promise sounded simple: the server never holds the key.

Today the question is tougher: Can the server influence the parameters that determine real-world strength?

This shift is observable in recent technical literature: the debate no longer focuses only on whether the server can see the key, but whether it can influence the cryptographic floor via negotiable parameters.

In parallel, the industry is moving toward the memory-hard Argon2id and stronger client-side enforcement. Effective resilience increasingly depends on a non-negotiable cryptographic floor.

This evolution shifts the center of gravity:

- From encryption to parameter governance

- From marketing to implementation discipline

- From promise to enforceable invariance

A zero-knowledge model becomes truly sovereign when:

- Minimum parameters are non-negotiable

- Backward compatibility permits no degradation

- Architecture prevents any external influence on derivation

Strategic Perspective: Beyond “Vulnerable Zero-Knowledge”

Zero-knowledge is not an absolute guarantee. It is a conditional trust model.

A “vulnerable zero-knowledge” finding is not a cryptographic failure. It is a consequence of architectural choices and compatibility constraints.

As long as a server can influence cryptographic parameters, resilience depends on implementation discipline.

Cryptographic sovereignty begins when:

- Downgrade negotiation becomes impossible.

- The cryptographic floor is non-negotiable.

- The secret never exists outside user control.

The debate is not “encrypted vs not encrypted.”

It is “negotiable governance vs sovereign architecture.”

Key Takeaways

- A vulnerable zero-knowledge outcome is not broken encryption—it is backward compatibility debt.

- The risk appears only under an active server compromise assumption.

- KDF governance becomes a strategic security issue.

- Removing downgrade negotiation improves resilience.

- A no-server-dependency architecture eliminates downgrade surfaces by design.

Glossary

Zero-knowledge

Core definition

A security model where the provider never holds the decryption keys. Cryptographic operations occur client-side.

Vulnerable zero-knowledge

Architectural qualification

A situation where zero-knowledge remains mathematically sound but becomes structurally exposed due to backward compatibility or downgrade-capable parameter negotiation.

Downgrade attack

Attack mechanism

Forcing the use of older, weaker protocol versions or parameters to reduce brute-force resistance or enable cheaper offline attacks.

KDF (Key Derivation Function)

Derivation function

An algorithm that converts a password into a cryptographic key using tunable parameters (iterations, memory, parallelism). Security depends directly on those parameters.

PBKDF2

Legacy standard

A standardized key derivation function based on repeated iterations. Its security depends heavily on iteration count.

Argon2id

Modern standard

A memory-hard key derivation function designed to resist GPU/ASIC attacks through controlled memory cost.

Cryptographic backward compatibility

Version constraint

Maintaining support for older parameters or configurations to preserve access for legacy accounts—sometimes creating downgrade surfaces.

Downgrade negotiation

Degradation path

A client accepting parameters below a modern minimum floor, potentially opening an attack surface.

Active server compromise

Threat scenario

An attacker manipulates server responses to influence client cryptographic behavior.

Sovereign architecture

Independence principle

Secrets, keys, and parameters remain fully user-controlled, with no negotiable server dependency.

Segmented keys

Authentication mechanism

Independent random key segments assembled locally in memory to avoid a centralized master key.

FAQ — Vulnerable Zero-Knowledge

Is zero-knowledge broken by a downgrade attack?

No — zero-knowledge isn’t “broken”.

Encryption primitives remain intact. The risk appears when backward compatibility lets a compromised server enforce historically weaker KDF parameters that the client still accepts.

What is a downgrade attack in a password manager?

How it works

It forces older cryptographic parameters to reduce brute-force cost. It requires active server compromise or manipulation of API responses.

Are all zero-knowledge password managers affected?

No — scope matters.

This analysis covers specific implementations. Risk depends on architecture, parameter enforcement, and threat model.

Why does backward compatibility become an attack surface?

The hidden cost of continuity

Supporting legacy vaults can preserve weaker parameters. If they remain activatable, they form downgrade surfaces under active compromise.

What is “parameter governance” in practical terms?

Governance means enforceability.

Who sets minimum floors? Who can lower them? Is the client allowed to reject? Governance turns math into operational security.

Does this mean PBKDF2 is insecure?

Not as a standardized key derivation function.

PBKDF2 security is parameter-dependent. Weak iteration counts and downgrade paths create risk, not PBKDF2 itself.

Why does Argon2id reduce downgrade risk?

Memory-hard cost matters.

Argon2id forces attackers to pay a memory cost for every guess, which blunts GPU/ASIC brute force. It does not stop downgrades by itself: it still needs strict minimum settings and client-side enforcement.

What should users check first?

Fast checks

Verify KDF type and settings (PBKDF2 iterations, Argon2id configuration). Update legacy accounts and use a strong master password.

Is this a mass exploitation event?

No public mass exploitation is documented.

This is a post-compromise threat model and typically targeted rather than opportunistic.

How can vendors eliminate downgrade surfaces?

Make floors non-negotiable.

Enforce strict minimum parameters client-side, disable legacy schemes, and prevent server-controlled negotiation.

Why do no-negotiation architectures fall outside this perimeter?

Design removes the attack class.

If keys are assembled locally and no server can influence parameters, downgrade negotiation cannot occur.

What does “cryptographic sovereignty” mean here?

Sovereignty is control over the floor.

It means the user controls secrets and parameters, and architecture prevents external degradation.